Creating a Vector Database with OpenSearch

A vector database is a type of database designed to store and search vectors. Vectors, also called embeddings, are sets of numbers that represent points in a multi-dimensional space. Embeddings have been around in machine learning for a long time, but vector databases for storing them have become more popular with the rise of large language models (LLMs).
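To make "points in a multi-dimensional space" a bit more concrete, here is a minimal sketch with made-up 3-dimensional vectors. Real embeddings have hundreds of dimensions, and the numbers and variable names below are invented for illustration, not produced by any model. The idea is simply that texts with similar meaning should end up close to each other.

```python
import math

# Toy 3-dimensional "embeddings"; the values are invented for illustration only.
spy_movie = [0.9, 0.1, 0.0]
heist_movie = [0.8, 0.2, 0.1]
romance_movie = [0.0, 0.2, 0.9]

# math.dist computes the straight-line (Euclidean) distance between two points.
near = math.dist(spy_movie, heist_movie)
far = math.dist(spy_movie, romance_movie)

print(f"spy vs heist:   {near:.3f}")
print(f"spy vs romance: {far:.3f}")
```

The two action-style vectors are much closer to each other than either is to the romance vector, which is the property a vector search relies on.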

OpenSearch is one of many databases that support storing and searching vectors. Its k-NN (k-nearest-neighbours) functionality allows us to implement semantic search.

In this tutorial, I’ll walk you through a simple implementation of a vector database with a public dataset (movies), and semantic search on top of it. The code repository can be found here.

Let’s start with some prerequisites

We need a running OpenSearch cluster. For this tutorial, we’ll run a cluster locally. Alternatively, you can create an OpenSearch cluster in your AWS account.

Install Docker

Create a docker-compose.yml file and paste in the configuration below.

    version: '3'
    services:
      opensearch-node1:
        image: opensearchproject/opensearch:2.1.0
        container_name: opensearch-node1
        environment:
          - cluster.name=opensearch-cluster
          - node.name=opensearch-node1
          - discovery.seed_hosts=opensearch-node1,opensearch-node2
          - cluster.initial_master_nodes=opensearch-node1,opensearch-node2
          - bootstrap.memory_lock=true # along with the memlock settings below, disables swapping
          - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m" # minimum and maximum Java heap size, recommend setting both to 50% of system RAM
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536 # maximum number of open files for the OpenSearch user, set to at least 65536 on modern systems
            hard: 65536
        volumes:
          - opensearch-data1:/usr/share/opensearch/data
        ports:
          - 9200:9200
          - 9600:9600 # required for Performance Analyzer
        networks:
          - opensearch-net
      opensearch-node2:
        image: opensearchproject/opensearch:2.1.0
        container_name: opensearch-node2
        environment:
          - cluster.name=opensearch-cluster
          - node.name=opensearch-node2
          - discovery.seed_hosts=opensearch-node1,opensearch-node2
          - cluster.initial_master_nodes=opensearch-node1,opensearch-node2
          - bootstrap.memory_lock=true
          - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m"
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        volumes:
          - opensearch-data2:/usr/share/opensearch/data
        networks:
          - opensearch-net
      opensearch-dashboards:
        image: opensearchproject/opensearch-dashboards:2.1.0
        container_name: opensearch-dashboards
        ports:
          - 5601:5601
        expose:
          - "5601"
        environment:
          OPENSEARCH_HOSTS: '["https://opensearch-node1:9200","https://opensearch-node2:9200"]' # must be a string with no spaces when specified as an environment variable
        networks:
          - opensearch-net

    volumes:
      opensearch-data1:
      opensearch-data2:

    networks:
      opensearch-net:

Run

    $ docker-compose up

You need to run this in the same folder as docker-compose.yml.

This file creates a multi-container setup: an OpenSearch cluster with two nodes, plus OpenSearch Dashboards. It exposes port 9200 for the OpenSearch API and port 5601 for OpenSearch Dashboards. Once the containers are up and running, you can make API calls to localhost:9200 and open the dashboard at localhost:5601. Go to localhost:5601 and log in with username admin and password admin to check the dashboard.

Below I’ll walk you through the notebook

1. Install the required Python libraries.

    %%sh
    pip install opensearch-py==2.4.2
    pip install boto3==1.34.29
    pip install sentence-transformers==2.2.2

Python version = 3.10.10

2. Load the dataset into a pandas DataFrame. We are using a public movies dataset. Download here.

    import pandas as pd

    # The actual file path of the CSV file
    file_path = 'tmdb_5000_movies.csv'

    # Specifying the columns we want to include in the DataFrame
    columns = ['id', 'original_title', 'overview']

    # Load the CSV file into a DataFrame with selected columns
    df_movies = pd.read_csv(file_path, usecols=columns)

    # Remove rows with NaN values
    df_movies = df_movies.dropna()

    # Display the DataFrame
    print(df_movies.head(5))

3. Create an OpenSearch client. The opensearch-py SDK allows us to create a client and execute operations. Since we are running the OpenSearch cluster locally, the host is localhost and the port number is 9200.

    from opensearchpy import OpenSearch

    CLUSTER_URL = 'https://localhost:9200'

    def get_client(cluster_url=CLUSTER_URL,
                   username='admin',
                   password='admin'):
        client = OpenSearch(
            hosts=[cluster_url],
            http_auth=(username, password),
            # The local Docker images ship with demo certificates, so we skip verification here.
            verify_certs=False
        )
        return client

    client = get_client()

4. Get a pre-trained model for embeddings. You can choose any pre-trained model, or an API, to create embeddings for your text. We are using “all-MiniLM-L6-v2” from SentenceTransformers.

This model is recommended for short paragraphs and sentences, and it is quite fast. Movie plots are relatively short paragraphs, which is why we chose it. You can choose any model that fits your own use case. Sentence-transformer models come with their own tokenizer, which applies the text normalization the model expects (lowercasing, in the case of this uncased model), so classic NLP preprocessing such as lemmatization or stop word removal is not needed for our dataset. An extra preprocessing step is only recommended when your sentences contain a lot of noise, such as URLs.
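If your own texts do contain noise such as URLs, a light cleanup step before encoding could look like the sketch below. The helper name `strip_urls` and the regular expression are my own assumptions for illustration. They are not part of sentence-transformers, and the pattern is deliberately simple rather than a complete URL matcher.

```python
import re

# Matches http(s) URLs and bare "www." links; a deliberately simple pattern.
URL_PATTERN = re.compile(r"(https?://\S+|www\.\S+)")


def strip_urls(text: str) -> str:
    """Remove URLs and collapse the whitespace they leave behind."""
    without_urls = URL_PATTERN.sub(" ", text)
    return " ".join(without_urls.split())


print(strip_urls("A spy goes on a mission, trailer at https://example.com/trailer today."))
```

The cleaned strings can then be passed to the model in place of the raw text.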

We need the embedding dimension (EMBEDDING_DIM) for the next step.

    from sentence_transformers import SentenceTransformer

    model_name = "all-MiniLM-L6-v2"
    model = SentenceTransformer(model_name)

    EMBEDDING_DIM = model.encode(["Sample sentence"])[0].shape[0]

5. Create an index on OpenSearch. Indexing is the method by which search engines organise data for fast retrieval. For semantic search, we need to use OpenSearch’s k-NN functionality.

    index_name = "movies"

    index_body = {
        "settings": {
            "index": {
                "knn": True,
                "knn.algo_param.ef_search": 100
            }
        },
        "mappings": {  # how the documents are stored
            "properties": {
                "embedding": {
                    "type": "knn_vector",  # the field that holds the embeddings
                    "dimension": EMBEDDING_DIM,
                    "method": {
                        "name": "hnsw",
                        "space_type": "l2",
                        "engine": "nmslib",
                        "parameters": {
                            "ef_construction": 128,
                            "m": 24
                        }
                    }
                }
            }
        }
    }

    response = client.indices.create(index=index_name, body=index_body)

“knn”: True means that for this index, the search engine will use the k-nearest neighbours algorithm on properties of type knn_vector. We define the “embedding” property as a knn_vector. We have to provide the dimension of the embeddings, which we already computed in the previous step.

We choose the space type for k-NN, i.e. the distance function used to compare vectors, e.g. l2 or cosinesimil.

We also choose the engine for k-NN, nmslib or faiss. Make sure to check the documentation on the supported combinations of method, engine and space type. Not every combination is possible.
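To get a feel for how the two space types differ, here is a small pure-Python sketch. It assumes, based on my reading of the k-NN documentation, that l2 works on the squared Euclidean distance, that cosinesimil works on 1 minus the cosine similarity, and that both are converted to a score as 1 / (1 + d) for the nmslib and faiss engines. Please verify this against the documentation for your OpenSearch version. The function names are mine, not OpenSearch APIs.

```python
import math


def l2_squared(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def to_score(distance):
    """Assumed distance-to-score conversion: smaller distance, higher score."""
    return 1.0 / (1.0 + distance)


a = [1.0, 0.0]
b = [2.0, 0.0]  # same direction as a, but twice as long

print("l2 score:    ", to_score(l2_squared(a, b)))       # penalises the difference in length
print("cosine score:", to_score(cosine_distance(a, b)))  # only looks at direction
```

With l2, vector length matters. With cosinesimil, only the direction does, which is why the two can rank the same documents differently.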

6. Loop over each row in the dataframe and insert its embedding into the database. This step shows how to insert one data point at a time, with multiple properties, into our index. After that, we’ll also show you how to do it as a bulk operation.

    for index, row in df_movies.iterrows():
        print(f"Id: {row['id']}, Title: {row['original_title']}, Overview: {row['overview']}")

        original_title = row['original_title']
        overview = row['overview']
        movie_id = row['id']  # renamed from "id" to avoid shadowing the Python builtin

        # The sentence transformer model takes a list of documents as input and returns a list of embeddings.
        embedding = model.encode([overview])[0]

        # We are inserting a data point with 4 attributes: "id", "title", "plot" and "embedding" (knn_vector type).
        my_doc = {"id": movie_id, "title": original_title, "plot": overview, "embedding": embedding}

        res = client.index(
            index=index_name,
            body=my_doc,
            id=str(index),
            refresh=True
        )

Alternative: Instead of iterating over the dataframe, we can also insert all embeddings at once using a bulk operation.

    from opensearchpy import helpers

    # Keep only the first 100 movies for this example
    df_movies = df_movies.head(100)

    # Get embeddings using the model (pass a plain list of strings rather than a pandas Series)
    embeddings = model.encode(df_movies["overview"].tolist())

    df = pd.DataFrame({
        "id": df_movies["id"].tolist(),
        "title": df_movies["original_title"].tolist(),
        "plot": df_movies["overview"].tolist(),
        "embedding": embeddings.tolist(),  # Convert embeddings to lists for the DataFrame
    })

    docs = df.to_dict(orient="records")

    helpers.bulk(client, docs, index=index_name, raise_on_error=True, refresh=True)

7. Send queries. In this step, we’ll retrieve the data points that are most similar to our search query. This is a vector search, which powers semantic and similarity search.

An example search query syntax:

    # Example query text
    user_query = "A spy goes on a mission"

    # Embed the query using the same model
    query_embedding = model.encode(user_query)

    query_body = {
        "query": {"knn": {"embedding": {"vector": query_embedding, "k": 3}}},
        "_source": False,
        "fields": ["id", "title", "plot"],
    }

    results = client.search(
        body=query_body,
        index=index_name
    )

for i, result in enumerate(results["hits"]["hits"]):

plot = result['fields']['plot'][0]

title = result['fields']['title'][0]

score = result['_score']

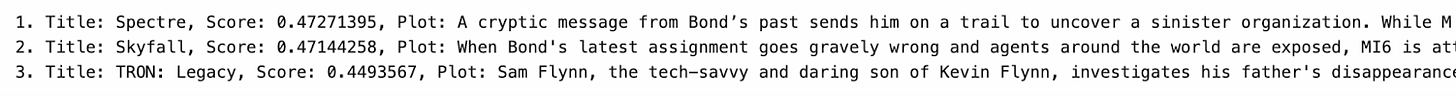

print(f"{i+1}. Title: {title}, Score: {score}, Plot: {plot}")We first create an embedding for our search text, using the same model that we use for the entire database. This is very important, the model used to create the vector database must be the same as we use in the search part. k:3 indicates how many nearest datapoints we want to retrieve. Fields define which properties we want to get. Since embeddings are numerical arrays, they don’t mean anything, so we retrieve the title, plot and the similarity score based on the chosen algorithm (in this case l2).
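To illustrate what “k” means, here is a brute-force sketch of a k-nearest-neighbour lookup over a handful of made-up 2-dimensional vectors. This is not how OpenSearch does it: the hnsw method used in our index is an approximate algorithm built for large collections, whereas this toy version compares the query against every vector. The function name `exact_knn` and the data are hypothetical.

```python
import heapq
import math


def exact_knn(query, vectors, k):
    """Return the k (distance, doc_id) pairs closest to `query` under Euclidean distance."""
    distances = ((math.dist(query, vec), doc_id) for doc_id, vec in vectors.items())
    return heapq.nsmallest(k, distances)


# Hypothetical 2-dimensional vectors keyed by made-up document ids.
toy_index = {
    "spy": [0.9, 0.1],
    "heist": [0.8, 0.3],
    "romance": [0.1, 0.9],
    "musical": [0.2, 0.8],
}

# Ask for the 2 nearest neighbours of a query vector.
for distance, doc_id in exact_knn([1.0, 0.0], toy_index, k=2):
    print(doc_id, round(distance, 3))
```

Raising k returns more, progressively less similar, results. The vector search above does the same thing conceptually, just with real embeddings and an index that avoids scanning every document.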

In this tutorial, we demonstrated how to create a vector database and implement similarity search in a simple way, using a movies dataset. With the growing interest in LLMs and prompt engineering, vector databases will become indispensable, and we will see them used not just for LLM applications, but also for recommender systems.