Anyone who knows something about DevOps is familiar with the so-called "DTAP" pipeline (Development > Testing > Acceptance > Production), which can be described as follows:

Development: the least stable environment, where the product is developed. It has no access to production data. Developers have full access to the environment.

Testing: an environment that closely resembles the target production environment, where the product is tested. It has no access to production data. Developers have full access to the environment.

Acceptance: a copy of the production environment with access to production data, where the customer verifies that the product meets the requirements. Developers should not have direct access to the environment (with some possible exceptions).

Production: the environment where the product gets deployed after the customer accepts it. Developers should never have direct access to production.

How many environments are needed for ML products?

DTAP sounds nice but does not really work for machine learning products. Why? For the same reason that DevOps is not the same as MLOps: machine learning products differ from software products because the data itself is a crucial piece. We are not talking about the data schema; we are talking about the distributions and patterns in the data, which can change constantly and cannot easily be reproduced in a synthetic dataset.

Data scientists need read access to production data to do their job, so the Development and Testing environments of the standard DTAP approach, which assume no access to production data, are useless to them.

Is it then enough to have only two environments, Acceptance and Production? The Acceptance environment would then be used both for developing machine learning models and for user acceptance tests, and the Production environment would be used to deploy the end product. In this case, data scientists would have direct access to the Acceptance environment, which is not ideal: development can interfere with user acceptance tests running in the same environment. For example, data scientists could accidentally overwrite files that the user acceptance tests need and compromise the results. Having three environments is therefore essential:

Development: the environment where the development of a machine learning product happens. Data scientists have full access to the environment.

Acceptance: a copy of the production environment with access to production data, where the customer verifies that a machine learning product meets the requirements. Data scientists should not have direct access to the environment.

Production: the environment where a machine learning product gets deployed after the customer accepts it. Data scientists should never have direct access to production.

For an MLOps platform that supports a particular architecture for machine learning products (covering more than 80% of all use cases), three environments should be enough. For use cases that require a specific architecture, an extra non-production environment might be a good idea. That environment, however, is not used by data scientists: it exists so that machine learning, cloud, and data engineers can figure out how to tie the pieces of the architecture together, and it does not require access to production data.

We can call this the MLOps (N)DAP pipeline, which stands for Non-production (optional) > Development > Acceptance > Production. Importantly, the Development and Acceptance environments for ML products must have the same security controls as Production, which is not the case in the standard DTAP approach.
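The environment rules above can be written down as data and checked automatically, for example as part of platform tests. This is a minimal sketch: the `Environment` record, its fields, and the `"production"` label for the security baseline are hypothetical names, not part of any real platform. It encodes one reading of the text: Development reads production data and therefore gets production-grade security controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Access rules for one environment in a hypothetical (N)DAP setup."""
    name: str
    production_data: bool        # can this environment read production data?
    data_scientist_access: str   # "full" or "none"
    security_controls: str       # label of the security baseline applied


# Hypothetical encoding of the (N)DAP environments described above.
NDAP = [
    Environment("non-production", production_data=False,
                data_scientist_access="none", security_controls="standard"),
    Environment("development", production_data=True,
                data_scientist_access="full", security_controls="production"),
    Environment("acceptance", production_data=True,
                data_scientist_access="none", security_controls="production"),
    Environment("production", production_data=True,
                data_scientist_access="none", security_controls="production"),
]


def check_ndap(envs: list[Environment]) -> list[str]:
    """Return violations of the (N)DAP rules; an empty list means the setup is consistent."""
    violations = []
    for env in envs:
        # Any environment that reads production data needs production-grade controls.
        if env.production_data and env.security_controls != "production":
            violations.append(f"{env.name}: production data without production security controls")
        # Only the development environment gives data scientists direct access.
        if env.name != "development" and env.data_scientist_access != "none":
            violations.append(f"{env.name}: data scientists should not have direct access")
    return violations


print(check_ndap(NDAP))  # []
```

Running such a check against the actual infrastructure definitions would catch, for example, an acceptance environment that accidentally grants data scientists direct access.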

Example workflow with Git

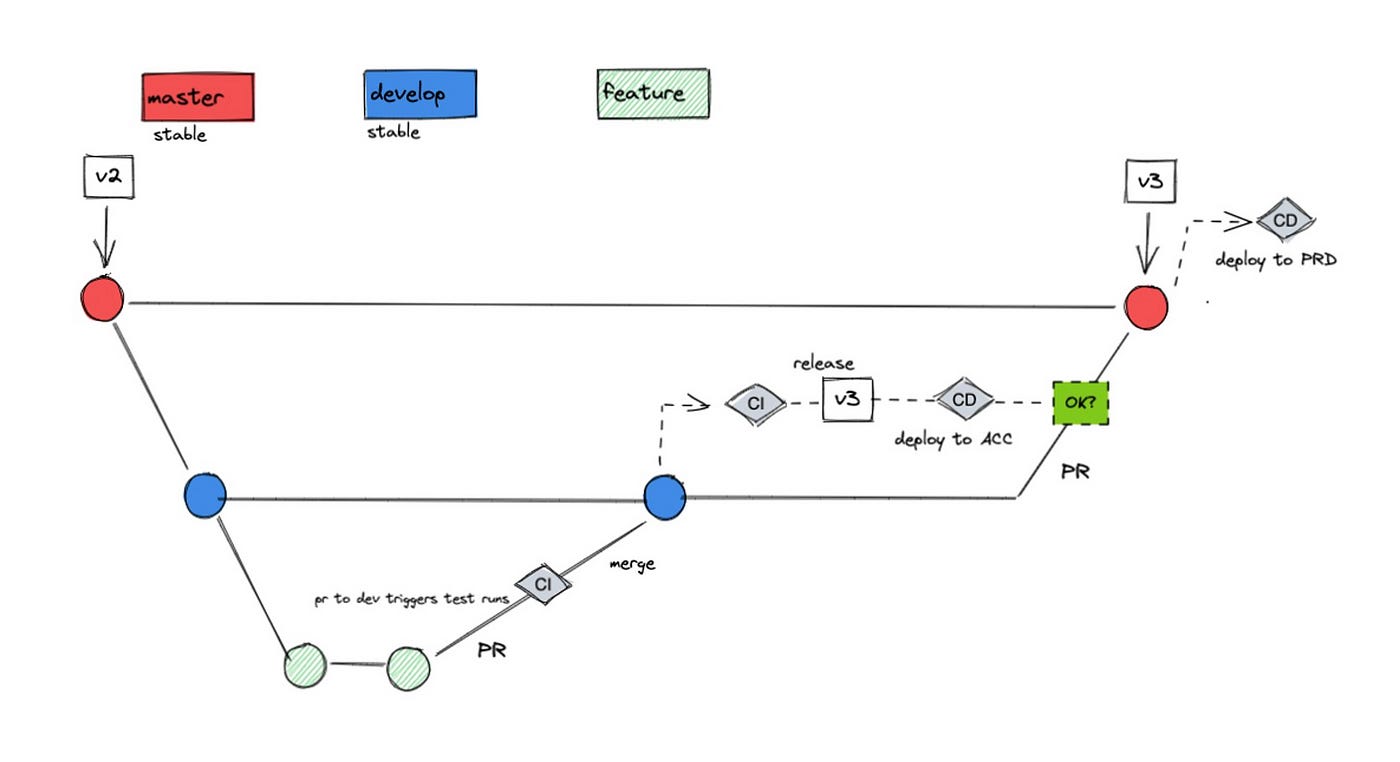

The developer creates a feature branch feature-1 off the current develop branch.

The developer pushes code to feature-1 and creates a pull request into the develop branch.

The develop branch is protected: merging into it requires passing unit tests and code style checks, as well as a review by peers.

When the pull request is merged into the develop branch, integration tests are triggered in the development environment.

After the integration tests succeed, a new release tag is created pointing to the current state of the develop branch, and the machine learning model is deployed to the acceptance environment.

Once business users, machine learning engineers, or data scientists have completed testing, the code from that tag is merged into the master branch and a new release tag is generated.

Merging into the master branch triggers the deployment to the production environment.
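The trigger logic of this flow can be summarised as a mapping from git events to CI/CD actions. This is a minimal sketch: the event names (`pull_request`, `merge`) and action labels are hypothetical and not tied to any particular CI system, which would normally express the same mapping in its own pipeline configuration.

```python
def pipeline_actions(event: str, target_branch: str) -> list[str]:
    """Return the ordered actions a CI/CD system could run for a git event (hypothetical labels)."""
    if event == "pull_request" and target_branch == "develop":
        # Gate for merging into the protected develop branch.
        return ["unit_tests", "code_style_checks", "require_peer_review"]
    if event == "merge" and target_branch == "develop":
        # Integration tests in development, then a release tag and deployment to acceptance.
        return ["integration_tests:development", "create_release_tag", "deploy:acceptance"]
    if event == "merge" and target_branch == "master":
        # Accepted code gets a new release tag and is promoted to production.
        return ["create_release_tag", "deploy:production"]
    # Other events (e.g. pushes to feature branches) trigger nothing in this sketch.
    return []


print(pipeline_actions("merge", "develop"))
# ['integration_tests:development', 'create_release_tag', 'deploy:acceptance']
```

The actions are ordered so that a CI runner can stop at the first failure: a failed integration test means no release tag and no deployment to acceptance.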

This standard git-flow looks simple, but is it really? What kinds of unit tests and integration tests are we talking about? What does it mean for a machine learning model to be deployed?

What exactly is being deployed?

Generally speaking, a machine learning model repository contains an orchestration definition (for example, a JSON job definition for Databricks or a Python DAG definition for Airflow), as well as code for training the model, storing model metadata and model artifacts, and either generating predictions (for the batch use case) or deploying a model endpoint (for the real-time inference use case).
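To make this concrete, here is a toy version of the code such a repository contains, for the batch use case. This is a minimal sketch: the least-squares "model", the function names, and the `model-<version>.pkl`/`.json` file names are hypothetical stand-ins, and a temporary local directory stands in for whatever artifact storage the platform provides. The orchestration definition would call these steps in order.

```python
import json
import pickle
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def train(xs: list[float], ys: list[float]) -> dict:
    """Fit y = a*x + b by ordinary least squares (stand-in for real model training)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return {"a": a, "b": mean_y - a * mean_x}


def store(model: dict, out_dir: Path, version: str) -> Path:
    """Save the model artifact and its metadata side by side; return the artifact path."""
    out_dir.mkdir(parents=True, exist_ok=True)
    artifact = out_dir / f"model-{version}.pkl"
    artifact.write_bytes(pickle.dumps(model))
    metadata = {
        "version": version,
        "trained_at": datetime.now(timezone.utc).isoformat(),
        "params": model,
    }
    (out_dir / f"model-{version}.json").write_text(json.dumps(metadata))
    return artifact


def batch_predict(artifact: Path, xs: list[float]) -> list[float]:
    """Batch use case: load the stored artifact and score a batch of inputs."""
    model = pickle.loads(artifact.read_bytes())
    return [model["a"] * x + model["b"] for x in xs]


with tempfile.TemporaryDirectory() as tmp:
    path = store(train([1, 2, 3], [3, 5, 7]), Path(tmp), version="1.0.0")
    print(batch_predict(path, [4, 5]))  # [9.0, 11.0]
```

For the real-time use case, `batch_predict` would be replaced by code that deploys the stored artifact behind a model endpoint.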

When a pull request is created from a feature branch into the develop branch, unit tests check that the data preprocessing and utility functions are correct and that the orchestration definition is not broken. Python code style checks (black, flake8) are run as well.

Then come integration tests and deployment to acceptance and, eventually, production. What these look like depends on your model retraining strategy:
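The pull-request checks described above could look like the following pytest-style tests. This is a minimal sketch, assuming a hypothetical preprocessing function `fill_missing` and an orchestration definition reduced to a plain mapping from task names to upstream tasks; a real Databricks job JSON or Airflow DAG has a richer structure that the test would have to parse first.

```python
from typing import Optional
from graphlib import CycleError, TopologicalSorter


def fill_missing(values: list[Optional[float]], default: float = 0.0) -> list[float]:
    """Example preprocessing function: replace missing values with a default."""
    return [default if v is None else v for v in values]


# Hypothetical orchestration definition: task name -> upstream dependencies.
JOB = {
    "preprocess": [],
    "train": ["preprocess"],
    "log_model": ["train"],
    "batch_predict": ["log_model"],
}


def check_job_definition(job: dict[str, list[str]]) -> None:
    """Raise if a task depends on an unknown task or if the dependencies form a cycle."""
    for task, deps in job.items():
        unknown = set(deps) - set(job)
        if unknown:
            raise ValueError(f"{task} depends on unknown tasks {sorted(unknown)}")
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    list(TopologicalSorter(job).static_order())


def test_fill_missing():
    assert fill_missing([1.0, None, 3.0]) == [1.0, 0.0, 3.0]


def test_job_definition_is_valid():
    check_job_definition(JOB)


def test_cycle_is_detected():
    try:
        check_job_definition({"a": ["b"], "b": ["a"]})
    except CycleError:
        return
    raise AssertionError("cycle was not detected")


if __name__ == "__main__":
    test_fill_missing()
    test_job_definition_is_valid()
    test_cycle_is_detected()
    print("all tests passed")
```

pytest collects the `test_*` functions automatically; the style checks run as separate CI steps, e.g. `black --check .` and `flake8`.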

you retrain the model ad hoc (when you receive new data, which does not happen on a fixed schedule)

you retrain the model periodically, when new data comes in and when it makes sense for your business (weekly, monthly, etc.)

the model is retrained automatically after model drift is detected
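The three strategies differ mainly in what triggers retraining. This is a minimal sketch with hypothetical strategy names and flags. For the drift case it uses the Population Stability Index (PSI) of a single feature as a simple stand-in for drift detection, with 0.2 as a commonly quoted rule-of-thumb threshold; treat both the metric and the threshold as assumptions to tune for your data.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for v in sample:
            # Values outside the reference range are clamped into the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(strategy: str, *, new_data_arrived: bool = False,
                   schedule_due: bool = False, drift_score: float = 0.0,
                   drift_threshold: float = 0.2) -> bool:
    """Decide whether to retrain under one of the three strategies (hypothetical names)."""
    if strategy == "ad_hoc":
        return new_data_arrived
    if strategy == "periodic":
        return schedule_due
    if strategy == "drift":
        return drift_score > drift_threshold
    raise ValueError(f"unknown strategy: {strategy}")
```

A scheduled monitoring job could compute `psi(training_feature_values, recent_feature_values)` and pass it as `drift_score`.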

When code is merged into the develop branch, integration tests can run: all necessary files are copied to the development environment, and the actual model training and inference code runs on a subset of the production dataset.

After the integration tests pass, all necessary files are copied to the acceptance environment. You can choose to retrain the model in the acceptance environment:

only if the model itself has changed (for example, new features were introduced)

if the model is retrained periodically anyway (daily, weekly, monthly), retraining can be done "from scratch"

based on the previous state of the model, rather than from scratch.
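In code, such an integration test boils down to running the real training and inference entry points on a reproducible sample of production data and checking the results. This is a minimal sketch, assuming in-memory rows and a hypothetical `run_pipeline` function that stands in for the repository's actual training and inference code; in practice the subset would be read from the production data store inside the development environment.

```python
import math
import random


def sample_subset(rows: list[dict], fraction: float, seed: int = 42) -> list[dict]:
    """Take a reproducible random subset of the (production) dataset."""
    rng = random.Random(seed)
    k = max(1, int(len(rows) * fraction))
    return rng.sample(rows, k)


def run_pipeline(rows: list[dict]) -> list[float]:
    """Hypothetical stand-in for the real training + inference code."""
    mean_target = sum(r["y"] for r in rows) / len(rows)
    return [mean_target for _ in rows]  # trivial "model": predict the mean target


def integration_test(production_rows: list[dict], fraction: float = 0.01) -> None:
    """Run the pipeline end to end on a small subset and check its output."""
    subset = sample_subset(production_rows, fraction)
    predictions = run_pipeline(subset)
    assert len(predictions) == len(subset), "expected one prediction per input row"
    assert not any(math.isnan(p) for p in predictions), "NaN predictions"


rows = [{"x": i, "y": 2 * i} for i in range(1_000)]
integration_test(rows)  # runs the pipeline on a 1% sample; raises AssertionError on failure
```

A fixed seed keeps the sample identical across runs, so a failing integration test can be reproduced.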

Which of these options to choose depends on the cost of retraining the model versus the gains from better model performance. What must be checked in acceptance is that model inference works correctly and that the model is integrated with the acceptance version of the end system (for example, a website).

After the user acceptance tests succeed, all necessary files are copied to the production environment. If model retraining is costly, we may want to reuse the model artifacts from the acceptance environment.
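The inference part of this check can be automated as a smoke test on the output produced in acceptance; the integration with the end system needs checks of its own. This is a minimal sketch, assuming a batch use case whose predictions are records with hypothetical `id`, `label`, and `score` fields, where `score` is a probability; adapt the checks to the model's actual output schema.

```python
import math


def check_predictions(inputs: list[dict], predictions: list[dict],
                      allowed_labels: set[str]) -> list[str]:
    """Smoke-check batch inference output in acceptance; return the problems found."""
    problems = []
    # Every input row should get exactly one prediction.
    if len(predictions) != len(inputs):
        problems.append(f"expected {len(inputs)} predictions, got {len(predictions)}")
    input_ids = {row["id"] for row in inputs}
    for p in predictions:
        pid = p.get("id")
        if pid not in input_ids:
            problems.append(f"prediction for unknown id {pid!r}")
        if p.get("label") not in allowed_labels:
            problems.append(f"id {pid!r}: unexpected label {p.get('label')!r}")
        score = p.get("score")
        # Scores are assumed to be probabilities in [0, 1].
        if not isinstance(score, (int, float)) or math.isnan(score) or not 0 <= score <= 1:
            problems.append(f"id {pid!r}: invalid score {score!r}")
    return problems


inputs = [{"id": 1}, {"id": 2}]
predictions = [
    {"id": 1, "label": "positive", "score": 0.83},
    {"id": 2, "label": "negative", "score": 0.12},
]
print(check_predictions(inputs, predictions, {"positive", "negative"}))  # []
```

Returning a list of problems instead of failing on the first one gives reviewers of the acceptance run a complete picture in one go.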
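Reusing acceptance artifacts in production only makes sense if production runs exactly the model that was accepted. This is a minimal sketch, assuming both environments' artifact storage can be reached as local paths (a simplification) and using a SHA-256 digest as an illustrative integrity check; in practice the digest would be recorded when the model is accepted and verified after promotion.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def promote_artifact(artifact: Path, production_dir: Path) -> Path:
    """Copy an accepted model artifact to production and verify it arrived intact."""
    expected = sha256(artifact)  # digest of exactly what passed acceptance
    production_dir.mkdir(parents=True, exist_ok=True)
    target = Path(shutil.copy2(artifact, production_dir / artifact.name))
    if sha256(target) != expected:
        raise RuntimeError(f"checksum mismatch after copying {artifact.name}")
    return target


with tempfile.TemporaryDirectory() as tmp:
    accepted = Path(tmp, "acceptance", "model-1.0.0.pkl")
    accepted.parent.mkdir()
    accepted.write_bytes(b"model bytes")
    promoted = promote_artifact(accepted, Path(tmp, "production"))
    print(promoted.name)  # model-1.0.0.pkl
```

Keeping the file name (including the version) unchanged makes it easy to trace a production model back to its release tag.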
