Getting started with Databricks asset bundles

If you have ever worked with Databricks, you may have noticed that there are multiple ways to deploy a Databricks job with all its dependencies. It is very easy to get lost among them.

You may have encountered some of the following common ways to deploy a job on Databricks:

Terraform

Databricks APIs

dbx by Databricks Labs

Databricks asset bundles (DAB)

Terraform and the Databricks APIs are "DIY" solutions. If you want to execute a Python script (let's call it main.py) that requires a config.yml file and a custom package my_package to run, you need to make sure all files are uploaded to the workspace or DBFS before the job is deployed.

Databricks asset bundles and dbx are solutions that manage those dependencies for you. However, dbx is not officially supported, and Databricks advises using asset bundles instead (in public preview at the time of publication).

In this article, we go through the local setup for DAB, a code example, and the GitHub Actions deployment pipeline.

DAB: local setup

First of all, you need to install Databricks CLI version 0.205 or above. Follow the instructions here: https://docs.databricks.com/en/dev-tools/cli/install.html. If you already have an older installation of the Databricks CLI, uninstall it first.
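If you want a script (for example, a pre-deployment check) to verify the installed version, a minimal sketch can parse the output of `databricks --version`. The output format `Databricks CLI v0.205.0` is an assumption based on recent CLI releases, and the helper names `parse_cli_version` and `is_supported` are hypothetical.

```python
import re

# Minimum CLI version that supports asset bundles, as stated above.
MIN_VERSION = (0, 205, 0)


def parse_cli_version(output: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from `databricks --version` output.

    Searches for the first x.y.z token, optionally prefixed with "v",
    so it tolerates small differences in the surrounding text.
    """
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"Could not find a version number in: {output!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def is_supported(output: str) -> bool:
    """Return True if the reported CLI version is at least 0.205.0."""
    return parse_cli_version(output) >= MIN_VERSION
```

In practice you would pass it the text from `subprocess.run(["databricks", "--version"], capture_output=True, text=True).stdout`. Comparing tuples keeps the check numeric, so 0.18 correctly counts as older than 0.205.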

After the CLI is installed, you need to configure authentication for it. The easiest way to do this is to set the environment variables DATABRICKS_HOST and DATABRICKS_TOKEN.
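A quick pre-flight check can catch a missing variable before the CLI fails with a less obvious error. This is a hypothetical helper, not part of the Databricks CLI; the rule that the host should be a full `https://` URL is our own sanity check.

```python
import os
from collections.abc import Mapping

# The two variables the CLI reads in this setup.
REQUIRED_VARS = ("DATABRICKS_HOST", "DATABRICKS_TOKEN")


def check_databricks_env(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return a list of problems with the authentication variables.

    An empty list means both variables are set and the host looks like
    a full https:// workspace URL.
    """
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]
    host = env.get("DATABRICKS_HOST", "")
    if host and not host.startswith("https://"):
        problems.append("DATABRICKS_HOST should be a full URL starting with https://")
    return problems
```

Calling `check_databricks_env()` with no arguments inspects the current process environment; passing a dictionary makes it easy to test.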

The example

The folder structure for our example is the following:

```text
├── .github/
│   └── workflows/
│       └── deploy_dab.yml
├── src/
│   ├── my_package/
│   │   ├── __init__.py
│   │   └── example.py
│   └── tests
├── name.yml
├── databricks.yml
├── main.py
├── README.md
└── pyproject.toml
```

main.py must be executed on Databricks, and its content is:

```python
import argparse

import yaml

from my_package.example import hello_you

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--root_path",
        action="store",
        type=str,
        required=True,
    )
    args = parser.parse_args()
    root_path = args.root_path

    # Read the config file that DAB uploaded to the bundle's files/ folder.
    with open(f"/Workspace/{root_path}/files/name.yml", "r") as file:
        config = yaml.safe_load(file)

    hello_you(config["name"])
```

We need my_package and a config file to run the script on Databricks. So my_package must be installed on the cluster, and name.yml must be uploaded to the given location in the Workspace (that location is defined in the databricks.yml file).
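The article does not show src/my_package/example.py. For local experiments, a minimal hypothetical version consistent with the expected output ("Hello Marvelous!") could look like this; the real module may differ (for example, it may log the greeting instead of printing it).

```python
# src/my_package/example.py (hypothetical: the original file is not shown)


def hello_you(name: str) -> str:
    """Print and return a greeting for `name`.

    Returning the string as well as printing it makes the function easy
    to unit-test locally, for example from src/tests.
    """
    greeting = f"Hello {name}!"
    print(greeting)
    return greeting
```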

pyproject.toml is quite minimal here:

```toml
[tool.poetry]
name = "my-package"
version = "0.1.0"
description = "Demo package"
authors = ["Marvelous MLOps <marvelousmlops@gmail.com>"]
packages = [
    {include = "my_package", from = "src"}
]

[tool.poetry.dependencies]
# Databricks Runtime 13.3 LTS ships Python 3.10, so the wheel must allow it.
python = "^3.10"
pyyaml = "^6.0.1"

[build-system]
requires = ["poetry-core"]
build-backend = "poetry.core.masonry.api"
```

name.yml contains the name; main.py is supposed to print out "Hello Marvelous!".

```yaml
name: Marvelous
```

databricks.yml is the file that defines the DAB configuration; it gets translated into a Databricks job JSON definition:

bundle:

name: demo-dab

artifacts:

default:

type: whl

build: poetry build

path: .

variables:

root_path:

description: root_path for the target

default: /Shared/.bundle/${bundle.target}/${bundle.name}

resources:

jobs:

demo-job:

name: demo-job

tasks:

- task_key: python-task

new_cluster:

spark_version: 13.3.x-scala2.12

node_type_id: Standard_D4s_v5

num_workers: 1

spark_python_task:

python_file: "main.py"

parameters:

- "--root_path"

- ${var.root_path}

libraries:

- whl: ./dist/*.whl

targets:

dev:

workspace:

host: <databricks host>

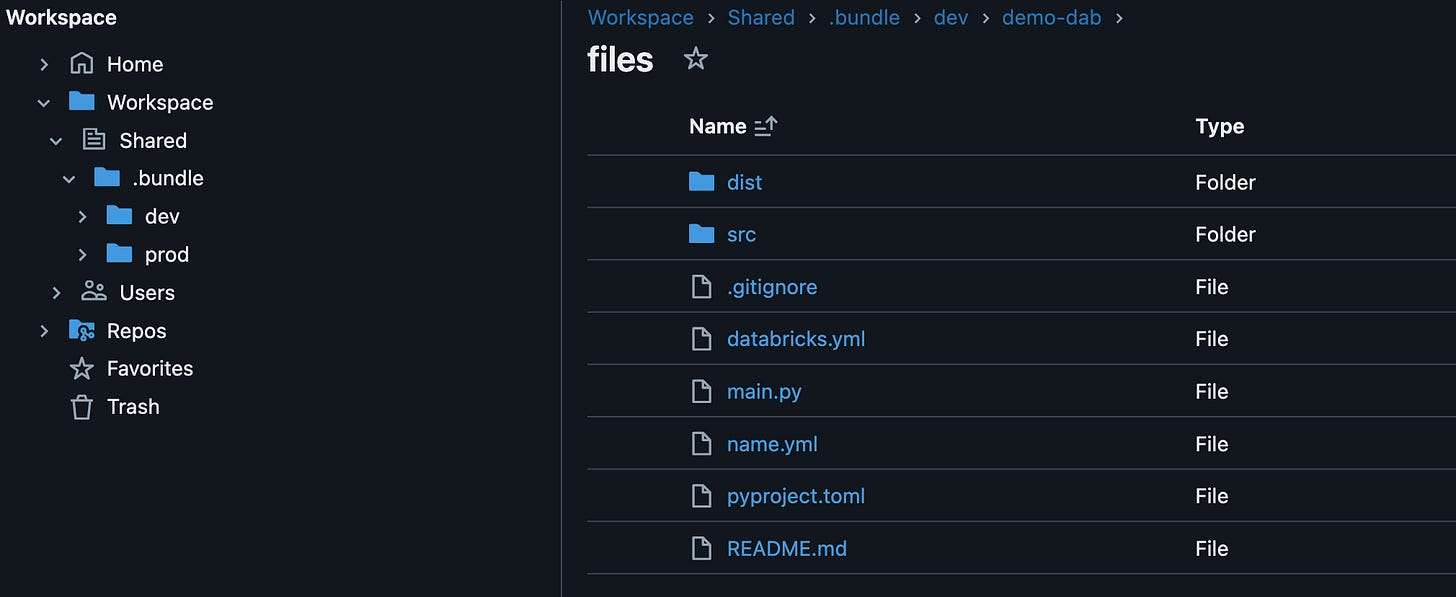

root_path: ${var.root_path}In this example, DAB builds a wheel using poetry build and uploads all the files to the Workspace/Shared/.bundle/dev/demo-bundle location.
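To see how the variables resolve for the dev target, here is a toy model of the substitution. It is a simplified stand-in, not the Databricks CLI implementation, and it assumes `${bundle.target}` is `dev` and `${bundle.name}` is `demo-dab`. It also shows the config path that main.py ends up opening.

```python
import posixpath
import re


def resolve(template: str, values: dict[str, str]) -> str:
    """Replace each ${key} reference with values[key] (toy substitution model)."""
    return re.sub(r"\$\{([^}]+)\}", lambda match: values[match.group(1)], template)


bundle_values = {"bundle.target": "dev", "bundle.name": "demo-dab"}
root_path = resolve("/Shared/.bundle/${bundle.target}/${bundle.name}", bundle_values)

# main.py builds the path as f"/Workspace/{root_path}/files/name.yml".
# root_path already starts with "/", so normpath collapses the double slash.
config_path = posixpath.normpath(f"/Workspace/{root_path}/files/name.yml")

print(root_path)
print(config_path)
```

The double slash produced by the f-string is harmless on a POSIX file system, which is why main.py works as written; normalizing it here just makes the resulting location easier to read.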

DAB limitations

1. DAB uploads the wheel it builds to the workspace location (this cannot be changed). If Unity Catalog is not enabled for the workspace, clusters will not be able to access the wheel file from the workspace and will fail to initialize.

If Unity Catalog is not enabled for your workspace, you can publish your package to PyPI first and refer to it in the databricks.yml file. Another option is to use a Docker image for cluster initialization.

The example in this article will only work in a workspace where Unity Catalog is enabled.

2. The workspace host value must be filled in the databricks.yml file when you use DAB. This is not ideal: if a personal access token (PAT) is used, the combination of the PAT and the host gives full access to the workspace, which can be considered an increased security risk.

As a workaround, the host value can be stored as a GitHub Actions secret, and a placeholder for it in the databricks.yml file can be replaced using Jinja2. Unfortunately, DAB does not support Jinja2 out of the box (unlike dbx), so you need to add a templating step to the workflow.
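A minimal sketch of such a templating step in Python, assuming the jinja2 package is installed and that databricks.yml contains a hypothetical `{{ databricks_host }}` placeholder instead of the real host. The function name `render_bundle_config` is also hypothetical.

```python
from jinja2 import StrictUndefined, Template

# Hypothetical excerpt of a templated databricks.yml. ${var.root_path} is DAB
# syntax; Jinja2 leaves it alone because it only reacts to {{ }}, {% %} and {# #}.
DATABRICKS_YML_TEMPLATE = """\
targets:
  dev:
    workspace:
      host: {{ databricks_host }}
      root_path: ${var.root_path}
"""


def render_bundle_config(template_text: str, host: str) -> str:
    """Fill in the host placeholder; StrictUndefined makes a missing value fail loudly."""
    template = Template(template_text, undefined=StrictUndefined, keep_trailing_newline=True)
    return template.render(databricks_host=host)
```

In the workflow, you could run this before `databricks bundle deploy`, reading the host from a secret exposed as an environment variable (for example `os.environ["DATABRICKS_HOST"]`) and writing the rendered text back to databricks.yml.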

Deploying DAB

When all the files described above are present, deploying DAB is as simple as running the following command locally:

```bash
databricks bundle deploy
```

After you run that command, you will find all the files at the expected location (the wheel file is under artifacts/.internal/…).
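If you deploy from a script rather than by hand, it can help to run `databricks bundle validate` first so configuration errors surface before anything is uploaded. This is a minimal sketch assuming the CLI is on the PATH; `validate_and_deploy` and its injectable `runner` parameter are hypothetical names, and the target is passed explicitly with the CLI's `--target` flag.

```python
import subprocess
from collections.abc import Callable, Sequence

# A runner takes a command line and returns its exit code.
Runner = Callable[[Sequence[str]], int]


def run_command(cmd: Sequence[str]) -> int:
    """Run a command, letting its output stream to the console, and return the exit code."""
    return subprocess.run(list(cmd), check=False).returncode


def validate_and_deploy(target: str = "dev", runner: Runner = run_command) -> int:
    """Run `databricks bundle validate`, then `databricks bundle deploy` for `target`.

    Stops at the first command that fails and returns its exit code (0 on success).
    """
    for action in ("validate", "deploy"):
        code = runner(["databricks", "bundle", action, "--target", target])
        if code != 0:
            return code
    return 0
```

Injecting the runner keeps the ordering logic testable without calling the real CLI.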

In the UI, if you go to Workflows -> Jobs -> View JSON, you can see the full definition of the job. Notice that the Python file points to the location of the bundle, and so does the wheel file.
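If you copy that JSON, a small check can confirm that both paths point into the bundle. This sketch assumes the JSON follows the Jobs API 2.1 settings layout (`tasks[].spark_python_task.python_file` and `tasks[].libraries[].whl`); the function name is hypothetical, and the exact path prefix shown in the UI may differ from the examples used here.

```python
from typing import Any


def paths_outside_bundle(job_settings: dict[str, Any], bundle_root: str) -> list[str]:
    """Return python_file and whl paths that do NOT live under bundle_root."""
    prefix = bundle_root.rstrip("/") + "/"
    paths: list[str] = []
    for task in job_settings.get("tasks", []):
        python_file = task.get("spark_python_task", {}).get("python_file")
        if python_file:
            paths.append(python_file)
        # Only wheel libraries point at bundle artifacts; pypi/maven entries are skipped.
        paths.extend(lib["whl"] for lib in task.get("libraries", []) if "whl" in lib)
    return [path for path in paths if not path.startswith(prefix)]
```

An empty result means every script and wheel referenced by the job comes from the deployed bundle.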

The GitHub Actions workflow is very straightforward to set up. In this example, the workflow is triggered manually (workflow_dispatch) and contains three steps: check out the repository, set up the Databricks CLI with databricks/setup-cli pinned to a commit hash, and deploy the bundle using a PAT stored as the SP_TOKEN GitHub secret. Note: using a PAT is not recommended. On Azure, authenticate via OpenID Connect and use a Microsoft Entra ID token instead.

```yaml
name: "Deploy DAB"

on:
  workflow_dispatch:

jobs:
  deploy:
    name: "Deploy bundle"
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v3

      # Setup the Databricks CLI.
      - uses: databricks/setup-cli@7e0aeda05c4ee281e005333303a9d2e89b6ed639

      # Deploy the bundle to the "dev" target from databricks.yml
      - run: databricks bundle deploy
        working-directory: .
        env:
          DATABRICKS_TOKEN: ${{ secrets.SP_TOKEN }}
          DATABRICKS_BUNDLE_ENV: dev
```

Conclusions

DAB is the only sustainable managed way to deploy jobs on Databricks, since it is an officially supported product. It uses Terraform as a backend, so you can also view the terraform.tfstate file as part of your bundle.
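To see which resources Terraform tracks for a bundle, you can read the state file directly. This sketch assumes the standard Terraform state format (version 4, with a top-level `resources` list), and the state location `.databricks/bundle/dev/terraform/terraform.tfstate` is an assumption for a local deploy to the dev target; check your own `.databricks` folder, since the layout may differ between CLI versions.

```python
import json
from pathlib import Path


def list_state_resources(state_text: str) -> list[str]:
    """Return "type.name" for every resource recorded in a Terraform state file."""
    state = json.loads(state_text)
    return [f'{resource["type"]}.{resource["name"]}' for resource in state.get("resources", [])]


# Assumed state location for the "dev" target; adjust to your own deployment.
STATE_PATH = Path(".databricks/bundle/dev/terraform/terraform.tfstate")

if STATE_PATH.exists():
    for entry in list_state_resources(STATE_PATH.read_text()):
        print(entry)
```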

If you were using dbx before, migrating to DAB should be fairly straightforward: the YAML definition for DAB deployment was clearly inspired by dbx.

The DAB product team also provides working templates through the "databricks bundle init" command, which makes it easy to get started.